Workflow Orchestrator

Multi-step data workflows without code. Chain SQL queries, notebooks, Python scripts—event-driven orchestration, fully managed, no infrastructure.

Data orchestration without devops

Multi-step workflows, event-driven execution, SQL-first configuration—no infrastructure required.

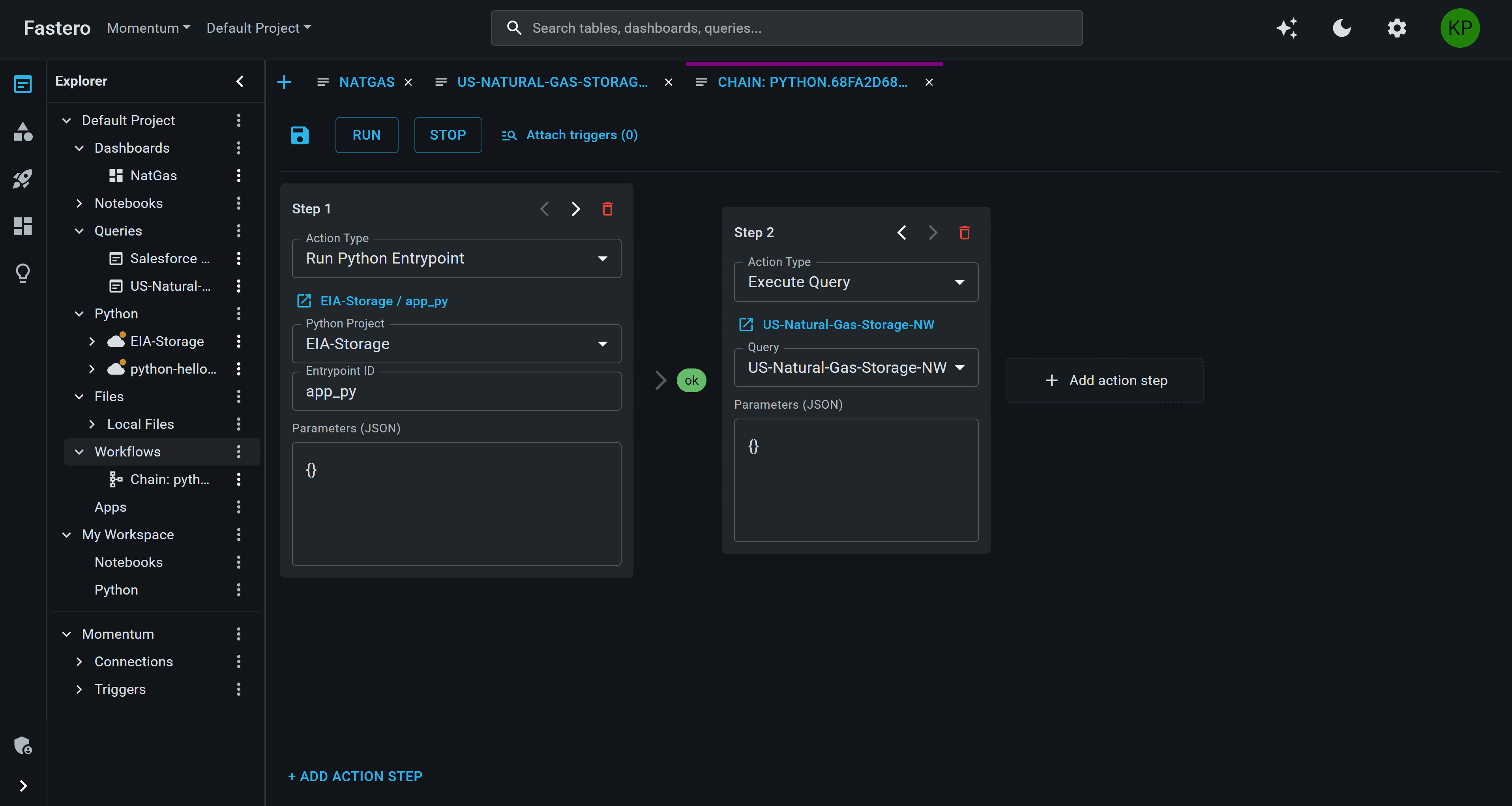

Multi-Step Workflows

Chain SQL queries, notebooks, Python scripts into pipelines. Conditional logic, branching, asset chaining—complex workflows made simple.

Event-Driven Execution

Triggered by change data capture (CDC), Kafka, webhooks, or schedules. Real-time workflows react to data changes, with no batch processing delays.

SQL-First Configuration

No Python DAGs required. Configure workflows with SQL conditions, UI forms—data team friendly, not devops.

Zero Infrastructure

Fully managed workflow engine. No Airflow servers, no Kubernetes—focus on logic, not infrastructure.

Asset Workflows

Auto-generate workflows from queries, notebooks. Query → Transform → Load pipelines—one-click orchestration.

Execution Logs & Audit

Every workflow run tracked. Step-by-step logs, success/failure status, retry failed runs—complete visibility.

Why teams choose Fastero for orchestration

No Python DAGs. Event-driven, not batch. Fully managed. SQL-first.

Orchestration Without Code

No Python DAGs, no YAML manifests. Configure workflows via UI—SQL-first for data teams, no devops required.

Event-Driven, Not Batch

Workflows triggered by CDC or Kafka events fire as soon as the event arrives. Real-time data pipelines, not hourly batch jobs.

Fully Managed Infrastructure

No Airflow servers, no Kubernetes setup. Workflow engine scales automatically—zero infrastructure overhead.

Complete Execution Visibility

Workflow logs track every run. See which step failed, why, when—debug faster with step-by-step execution history.

Multi-Step Workflow Example

Step 1: Execute Query

Run SQL: SELECT * FROM orders WHERE amount > 10000

Step 2: Conditional Branch

If result_count > 0 → proceed to Step 3, else → end workflow

Step 3: Run Downstream Workflow

Trigger workflow: notify_sales_team with high-value order data
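
The three steps above can be sketched in plain Python. This only illustrates the control flow and is not Fastero's API: the in-memory SQLite table, the sample rows, and the `notify_sales_team` function are stand-ins invented for the example.

```python
import sqlite3


def notify_sales_team(orders):
    """Hypothetical stand-in for the downstream workflow (Step 3)."""
    return f"notified sales team about {len(orders)} high-value order(s)"


def run_workflow(conn):
    # Step 1: execute the query.
    rows = conn.execute("SELECT * FROM orders WHERE amount > 10000").fetchall()
    # Step 2: conditional branch on the result count.
    if len(rows) == 0:
        return "ended: no high-value orders"
    # Step 3: run the downstream workflow with the query result.
    return notify_sales_team(rows)


# Assumed sample data: two of the three orders exceed 10000.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
conn.executemany(
    "INSERT INTO orders (id, amount) VALUES (?, ?)",
    [(1, 250.0), (2, 18000.0), (3, 12500.0)],
)

result = run_workflow(conn)
print(result)
```

In the product, the same branch is expressed as a SQL condition in the UI rather than as an `if` statement.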

How it works

Chain queries, notebooks, Python—conditional logic, branching—triggered by CDC, Kafka, schedules.

SQL-First Orchestration

Chain SQL queries, notebooks, Python scripts. No Python DAGs—configure workflows with SQL conditions, UI forms.

Event-Driven Execution

Workflows triggered by CDC, Kafka, webhooks, schedules. Real-time automation—no batch delays.

Fully Managed, No Servers

No Airflow infrastructure. Workflow engine scales automatically—focus on data logic, not devops.

Real-world use cases

Data Engineer

ETL Pipeline Automation

Workflow: Extract from Snowflake → Transform with Python → Load to BigQuery → Trigger downstream dashboards. Fully automated, event-driven.

Analytics Engineer

Daily Reporting Workflow

Schedule workflow runs daily at 9am: Execute revenue query → Export to CSV → Email to executives. No manual exports.

ML Engineer

Model Retraining Pipeline

CDC trigger on training_data table → Run notebook (retrain model) → Deploy new version → Update model registry. Automated MLOps.

Common questions

Do I need to write Python DAGs?

No. Workflows are configured via UI forms with dropdowns and SQL conditions. No Python required—SQL-first for data teams.

What can workflows orchestrate?

Chain SQL queries, Jupyter notebooks, and Python scripts into multi-step pipelines. Add conditional logic (if/else), trigger downstream workflows, and send webhooks.

How are workflows triggered?

Triggered by CDC (data changes), Kafka events, HTTP webhooks, or cron schedules. Event-driven execution—workflows run automatically when data changes.
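
As a rough mental model, event-driven triggering is a mapping from trigger sources to the workflows that run when a matching event arrives. The sketch below is hypothetical: the `TriggerRouter` class, the source names, and the event payloads are illustrative and do not describe Fastero's internals.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = Dict[str, object]
Workflow = Callable[[Event], str]


class TriggerRouter:
    """Hypothetical router: maps a trigger source to the workflows it starts."""

    def __init__(self) -> None:
        self._routes: Dict[str, List[Workflow]] = defaultdict(list)

    def on(self, source: str, workflow: Workflow) -> None:
        # Register a workflow to run whenever `source` emits an event.
        self._routes[source].append(workflow)

    def dispatch(self, source: str, event: Event) -> List[str]:
        # Run every workflow registered for this source, in registration order.
        return [workflow(event) for workflow in self._routes.get(source, [])]


def retrain_model(event: Event) -> str:
    return f"retrain after change to {event['table']}"


def daily_report(event: Event) -> str:
    return f"report for {event['date']}"


router = TriggerRouter()
router.on("cdc", retrain_model)
router.on("schedule", daily_report)

cdc_runs = router.dispatch("cdc", {"table": "training_data"})
unmatched = router.dispatch("kafka", {"topic": "orders"})
print(cdc_runs)
print(unmatched)
```

A source with no registered workflow simply starts nothing, which is why the Kafka event in the sketch produces an empty list.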

Can I see workflow execution logs?

Yes—workflow logs show step-by-step execution with timestamps, duration, success/failure status, and error messages. Retry failed runs from the UI.

How do workflows handle failures?

Failed runs are logged with error details, and each step shows its success/failure status so you can see where the run broke. Retries are manual: re-run a failed run from the UI.
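
One way to picture step-level logging and manual retry is sketched below. Everything in it is an assumption made for illustration: the `StepLog` record, the `run_steps` helper, and the flaky `load` step are hypothetical. Whether a retry re-runs the whole workflow, as this sketch does, or resumes from the failed step is not stated here.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class StepLog:
    step: str
    status: str  # "success" or "failure"
    duration_s: float
    error: Optional[str] = None


def run_steps(steps: List[Tuple[str, Callable[[], None]]]) -> List[StepLog]:
    """Run steps in order, log each one, and stop at the first failure."""
    logs: List[StepLog] = []
    for name, fn in steps:
        started = time.perf_counter()
        try:
            fn()
            logs.append(StepLog(name, "success", time.perf_counter() - started))
        except Exception as exc:
            elapsed = time.perf_counter() - started
            logs.append(StepLog(name, "failure", elapsed, str(exc)))
            break
    return logs


attempts = {"load": 0}


def extract() -> None:
    pass  # placeholder step that always succeeds


def load() -> None:
    # Fails on the first attempt only, to simulate a transient error.
    attempts["load"] += 1
    if attempts["load"] == 1:
        raise RuntimeError("destination unavailable")


steps = [("extract", extract), ("load", load)]
first_run = run_steps(steps)
retry_run = run_steps(steps)  # manual retry: run the workflow again

for log in first_run + retry_run:
    print(log.step, log.status, log.error)
```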

What is an asset workflow?

An asset workflow is a workflow auto-generated from a query, notebook, or Python project. One-click orchestration: turn any asset into a workflow step.

Ready to automate your data workflows?

Multi-step orchestration. Event-driven execution. SQL-first configuration. Fully managed infrastructure—start free, no credit card required.